How elastic is your network traffic?

How much bandwidth do I need? Always a hard question. It gets harder as you use more network links and have to start considering what happens when one or more of them fail, leaving you with reduced bandwidth.

The simple answer is to make a guess and then adjust until the peaks in your bandwidth graphs stay below the 100% line. The more complex answer is that it depends on the bandwidth elasticity of the applications that generate your network traffic.

Applications are bandwidth elastic (sometimes known as "TCP friendly") when they adapt how much data they send to the available bandwidth. They're inelastic when they keep sending the same amount of data even when the network can't handle that much. Let's look at a few examples in more detail.
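To make the "TCP friendly" idea concrete, here is a toy model of the additive-increase/multiplicative-decrease (AIMD) behavior that TCP congestion control is commonly described by, next to an inelastic constant-rate sender. This is a minimal sketch, not real TCP: rates are in arbitrary units per step, and the increase step, the 0.5 backoff factor, and the function names are illustrative assumptions.

```python
def aimd_rates(capacity, steps, start=1.0, increase=1.0, decrease=0.5):
    """Toy elastic sender: additive increase, multiplicative decrease.

    While the rate is at or below `capacity` it grows by `increase` each step;
    once it exceeds `capacity` (standing in for packet loss), it is
    multiplied by `decrease`.
    """
    rate = start
    rates = []
    for _ in range(steps):
        rates.append(rate)
        if rate > capacity:
            rate *= decrease   # congestion signal: back off multiplicatively
        else:
            rate += increase   # no congestion: probe for more bandwidth
    return rates


def constant_rates(rate, steps):
    """Toy inelastic sender: same rate no matter what the network can carry."""
    return [rate] * steps


elastic = aimd_rates(capacity=10, steps=40)
inelastic = constant_rates(15, steps=40)
print("elastic peak:", max(elastic), "inelastic rate:", inelastic[0])
```

With a capacity of 10, the AIMD sender's rate saws between roughly half the capacity and just above it, while the constant-rate sender at 15 stays over capacity indefinitely.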

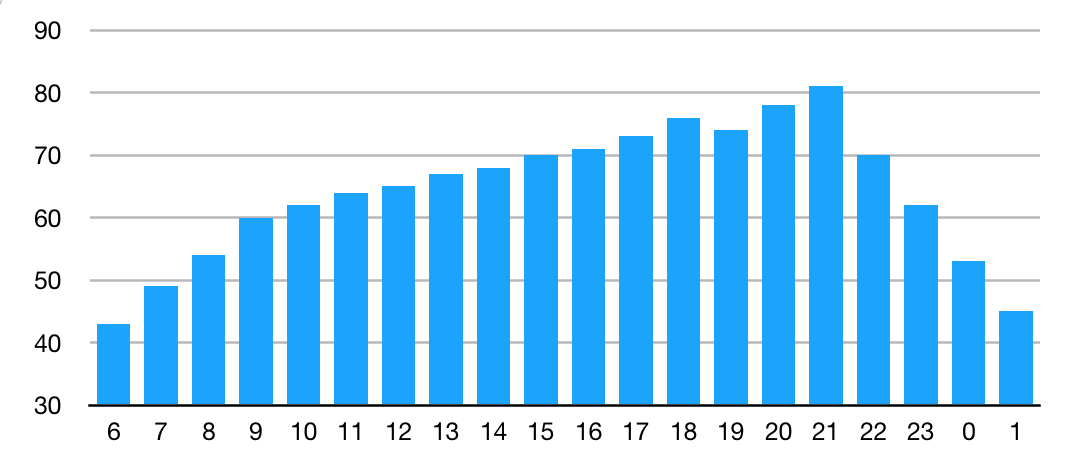

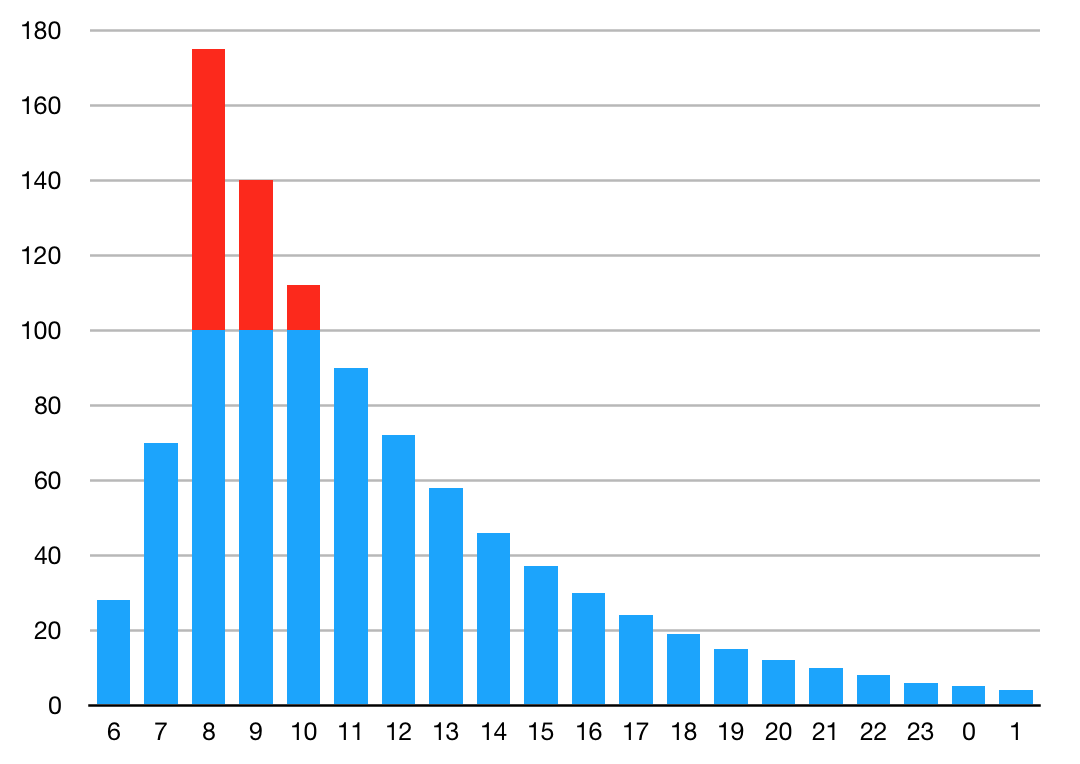

I'm assuming the bandwidth need throughout the day shown in this graph:

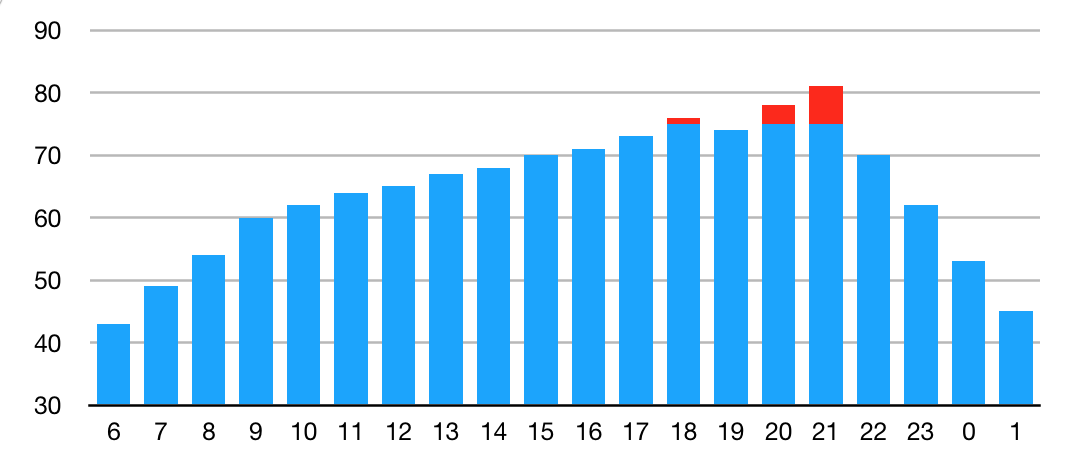

Between 21:00 and 22:00, normal bandwidth use reaches a peak of just over 80% of available capacity. Now suppose we lose 25% of our bandwidth, leaving 75% of the original capacity. Every hour where demand exceeds 75% now has a deficit: we need more bandwidth than we can accommodate between 18:00-19:00 and 20:00-22:00, shown in red below:
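The arithmetic behind the red hours is simple: after losing 25% of capacity, any hour whose demand is above 75% of the original capacity has a deficit. This sketch uses hypothetical hourly numbers chosen to match the description (a peak just over 80% between 21:00 and 22:00); they are not read off the actual graph, and the function name is made up for illustration.

```python
# Hypothetical hourly demand, as % of the original (full) capacity.
# Keys are the starting hour of each one-hour slot.
demand = {17: 70, 18: 77, 19: 74, 20: 78, 21: 81, 22: 65}


def deficit_hours(demand, capacity_left):
    """Return the starting hours whose demand exceeds the remaining capacity."""
    return sorted(hour for hour, need in demand.items() if need > capacity_left)


remaining = 100 * (1 - 0.25)   # losing 25% of bandwidth leaves 75%
print(deficit_hours(demand, remaining))  # [18, 20, 21]
```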

Let's look at the behavior of applications with different bandwidth elasticity.

VoIP

Voice over IP is the classic example of an application with inelastic bandwidth needs. VoIP doesn't need much bandwidth, but it really needs what it needs. If bandwidth is insufficient, some packets sit in buffers longer than others, so they arrive with varying delays (jitter), which is very undesirable. When packets get lost, this shows up as a hiccup in the audio, also not a good thing. A VoIP application typically won't slow down its transmission rate, so the problem gets worse as the number of calls increases, until the users give up in frustration.
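For an inelastic sender such as VoIP, a simple way to estimate the damage is to assume that whatever exceeds capacity is dropped (buffering only turns part of that into extra delay). A minimal sketch under that assumption, with demand and capacity in the same units, here percent of the original link capacity; the function name and the hourly numbers are hypothetical.

```python
def inelastic_loss(demand, capacity):
    """Fraction of an inelastic sender's traffic dropped when demand exceeds capacity.

    Assumes a constant-rate sender that never backs off and that all excess
    traffic is eventually dropped.
    """
    if demand <= capacity:
        return 0.0
    return (demand - capacity) / demand


# Hypothetical hourly demand (% of original capacity), 75% capacity left.
for hour, demand in [(18, 77), (20, 78), (21, 81)]:
    print(f"{hour}:00  {inelastic_loss(demand, 75):.1%} of packets lost")
```

Because all calls share the same loss rate, every additional call raises demand and makes things worse for all of them.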

This graph shows that during the three hours where more bandwidth is required than is available, the user experience suffers:

File transfer

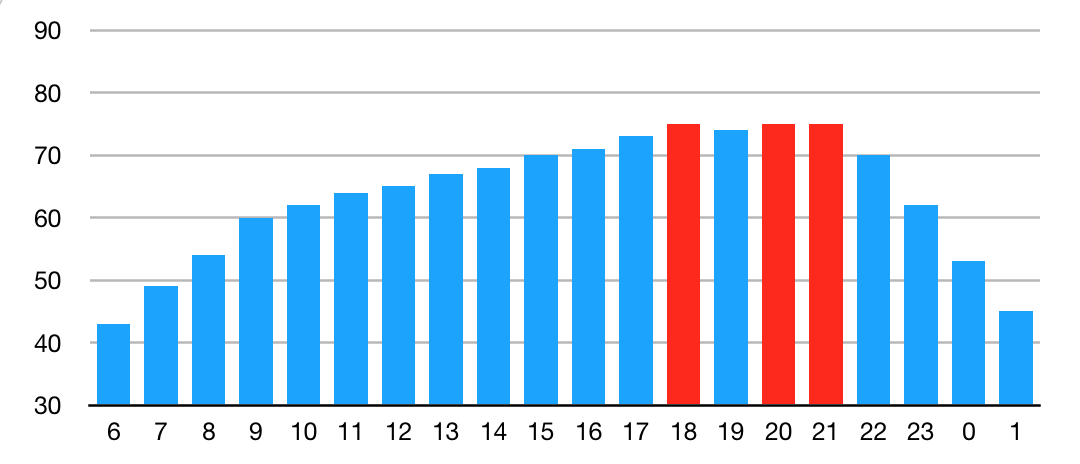

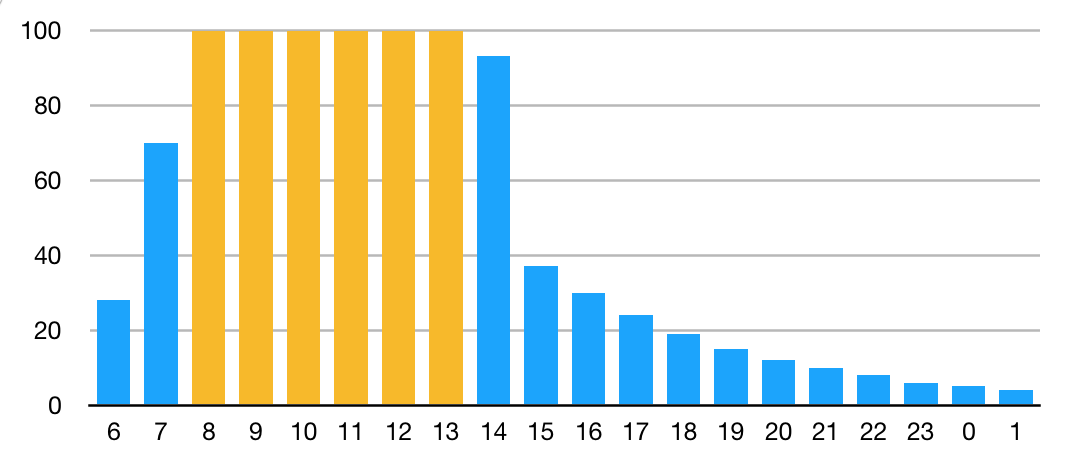

File transfer is often used as an example of a bandwidth elastic application. At short timescales this is true, as TCP, which file transfer protocols such as HTTP and FTP run over, adapts to the available bandwidth. However, at longer timescales file transfers aren't very elastic: the amount of data sent per second may be reduced, but the same total amount of data still has to be transferred; it just takes longer. So the backlog from 18:00-19:00 carries over into 19:00-20:00, which now also runs at full capacity. The same happens with 20:00-22:00, which spills over into 22:00-23:00 and even slightly beyond.
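The carry-over effect can be modeled as a queue: each hour, whatever TCP couldn't send is added to the next hour's demand. A minimal sketch, assuming transfers are never abandoned and using hypothetical hourly numbers (percent of original capacity, 75% left after the failure) that are not taken from the graph; the function name is made up for illustration.

```python
def serve_with_backlog(demand, capacity):
    """Traffic that slows down but doesn't shrink: unsent data rolls over.

    demand: per-hour demand, in the same units as capacity.
    Returns (served, backlog) lists with one entry per hour.
    """
    backlog = 0.0
    served, left = [], []
    for need in demand:
        want = backlog + need          # new demand plus leftover from before
        sent = min(want, capacity)     # TCP slows down to fit the link
        backlog = want - sent          # the rest still has to be sent later
        served.append(sent)
        left.append(backlog)
    return served, left


hours = [18, 19, 20, 21, 22, 23]
demand = [77, 74, 78, 81, 70, 60]     # hypothetical
served, backlog = serve_with_backlog(demand, capacity=75)
for h, d, s, b in zip(hours, demand, served, backlog):
    print(f"{h}:00  demand {d:>3}  sent {s:>5.1f}  backlog {b:>4.1f}")
```

In this sketch the 19:00 hour (demand 74) still runs at full capacity because of the backlog from 18:00, and the 22:00 hour does too, with the remainder cleared only after 23:00. Total data sent equals total demand; it is just spread out over more time.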

I've colored the first two hours where required bandwidth exceeds available bandwidth yellow, as some delay is tolerable for file transfers. But I've colored the next three hours red, indicating that the slowdowns are starting to become unacceptable.

Web browsing

Web browsing would seem like a bandwidth elastic application, as it's built on top of TCP. However, that's only true at very short timescales: when there is a traffic peak that lasts maybe a few tenths of a second, TCP will deal with that without much impact on the user experience. But users keep clicking on links, so new pages need to load promptly, or they'll start leaving the site.

Also, TCP handles lost packets in the middle of a session pretty well. However, losing the first or last packet of a session can slow things down a lot: there are no later packets to trigger TCP's fast retransmit, so the loss usually has to be detected by a retransmission timeout. The web tends to use many small transfers in parallel, so the risk of losing first and last packets is relatively high, which makes it important to avoid packet loss caused by overloading the network.
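To see why many small transfers raise the odds of losing a first or last packet, count them: every transfer has one first and one last packet, so splitting the same data into more, smaller transfers creates more of these costly packets. A minimal sketch assuming independent random loss at a fixed rate (real losses under congestion tend to be bursty); the function name is hypothetical.

```python
def expected_edge_losses(total_packets, packets_per_transfer, loss_rate):
    """Expected number of lost first/last packets when total_packets are sent
    as equal-sized transfers and each packet is lost independently with
    probability loss_rate."""
    transfers = total_packets / packets_per_transfer
    # A one-packet transfer has a single packet that is both first and last.
    edge_packets_per_transfer = 1 if packets_per_transfer == 1 else 2
    return transfers * edge_packets_per_transfer * loss_rate


print(expected_edge_losses(1_000, 1_000, 0.01))  # one big transfer
print(expected_edge_losses(1_000, 10, 0.01))     # 100 small transfers
```

With 1% loss, sending 1,000 packets as one transfer gives about 0.02 expected lost first/last packets, while sending them as 100 transfers of 10 packets gives about 2, each of which may cost a retransmission timeout.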

The user experience for web browsing with a bandwidth deficit falls somewhere between that for VoIP and that for file transfer.

Video streaming

Video can be encoded at very different rates: from "moving postage stamp" type video at something like 100 kbps to 4K resolutions at 25 Mbps or more. If the video stream is encoded at a fixed rate, then video streaming is just as inelastic as VoIP.

However, video streaming typically adjusts the video rate to the available bandwidth. In that case video becomes very elastic: it limits its bandwidth at short timescales, just like TCP-based applications, but the streaming sessions don't get longer as a result.
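Adaptive streaming players typically pick one of several pre-encoded versions (renditions) of the video based on measured throughput. Real players use more elaborate heuristics; this is a simplified throughput-based rule, and the bitrate ladder, the 0.8 safety margin, and the function name are assumptions for illustration, spanning the roughly 100 kbps to 25 Mbps range mentioned above.

```python
# Hypothetical bitrate ladder in kbps, from "moving postage stamp" to 4K.
LADDER_KBPS = [100, 400, 1_000, 2_500, 5_000, 8_000, 25_000]


def pick_bitrate(measured_kbps, ladder=LADDER_KBPS, safety=0.8):
    """Pick the highest rendition that fits within a safety margin of the
    measured throughput; fall back to the lowest rendition otherwise."""
    budget = measured_kbps * safety
    fitting = [rate for rate in ladder if rate <= budget]
    return max(fitting) if fitting else min(ladder)


print(pick_bitrate(4_000))   # before a 25% drop in throughput
print(pick_bitrate(3_000))   # after it
```

A 25% drop in throughput, from 4,000 to 3,000 kbps, moves this player from the 2,500 kbps rendition down to 1,000 kbps: picture quality suffers, but the video still plays in real time, so the session doesn't last any longer.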

In our example, the bandwidth deficit is less than 10%, so the user experience probably suffers relatively little.

Aside: software updates

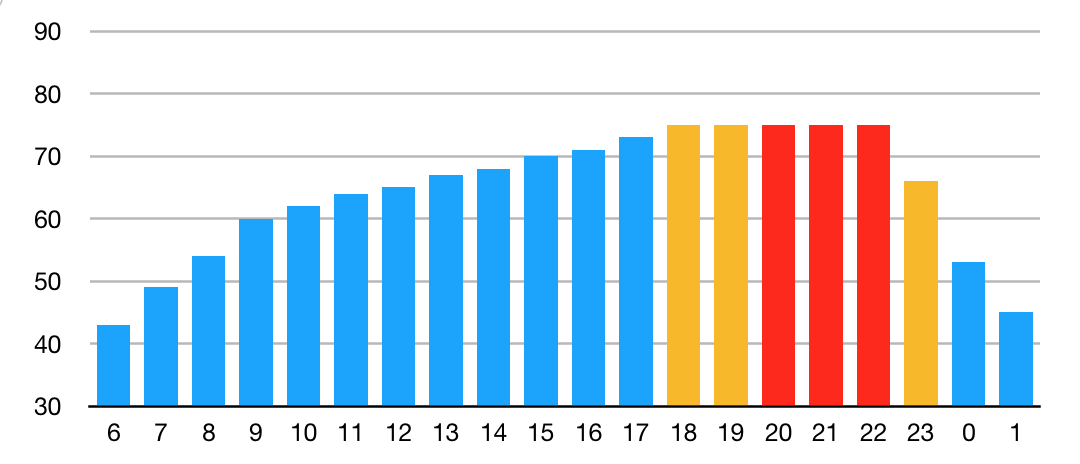

When a software maker releases a big update, especially a Microsoft or Apple operating system update, that generates a lot of traffic. Here, I'm assuming the update is released at 6:00 and that over the next few hours more and more users start downloading it, with the peak at 8:00. The bandwidth needed handily exceeds what's available, as shown in red:

As downloads slow down during the three hours with a bandwidth deficit, the peak gets smeared out over three additional hours:

I've made these yellow rather than red, indicating that this is not ideal but acceptable. Users who upgrade immediately expect slower downloads, within reason.

What's very important here is that when slowdowns get bad enough that downloads may be interrupted, they can resume from where they left off. There have been cases where the download had to start again from scratch, increasing the amount of bandwidth required exactly when the network is already congested.
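Resuming usually relies on HTTP range requests: the client asks for the bytes from its current file size onward, and a server that supports this replies with 206 Partial Content. A minimal sketch using Python's standard `urllib`; the function names are hypothetical, and it assumes the partial file on disk is a correct prefix of the file being downloaded (real updaters also verify checksums).

```python
import os
import urllib.request


def resume_request(url, partial_path):
    """Build a GET request that asks only for the bytes not yet downloaded."""
    req = urllib.request.Request(url)
    if os.path.exists(partial_path):
        already = os.path.getsize(partial_path)
        if already > 0:
            # HTTP range request: "send me everything from byte `already` on"
            req.add_header("Range", f"bytes={already}-")
    return req


def download(url, partial_path, chunk_size=64 * 1024):
    """Download url to partial_path, resuming a previous partial download."""
    req = resume_request(url, partial_path)
    with urllib.request.urlopen(req) as resp:
        # 206 Partial Content: the server honored the range, so append.
        # 200 OK: the server ignored it and sends the whole file, so start over.
        mode = "ab" if resp.status == 206 else "wb"
        with open(partial_path, mode) as out:
            while True:
                chunk = resp.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

If the file is already complete, the server may answer 416 Range Not Satisfiable, which `urlopen` raises as an `HTTPError`; a real client would handle that case.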

So what can we conclude?

First, VoIP leaves no room for error. If a good VoIP experience is important, you need to overdimension your network so even when bandwidth is reduced because of a link failure, you have sufficient bandwidth to accommodate all VoIP traffic.
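For inelastic traffic, the dimensioning rule is that peak demand must still fit after a failure. With n links of equal capacity and one of them failing, you lose 1/n of the total, so you need to provision peak / (1 - 1/n). A minimal sketch assuming equal-capacity links and a single failure; the function names and the 30 Mbps VoIP peak are hypothetical.

```python
def lost_fraction_one_of(n_links):
    """Fraction of capacity lost when one of n equal-capacity links fails."""
    return 1 / n_links


def required_capacity(peak_demand, lost_fraction):
    """Total capacity to provision so peak_demand still fits after losing
    lost_fraction of the capacity."""
    if not 0 <= lost_fraction < 1:
        raise ValueError("lost_fraction must be in [0, 1)")
    return peak_demand / (1 - lost_fraction)


# Hypothetical: 30 Mbps of VoIP at peak, spread over four equal links.
print(required_capacity(30, lost_fraction_one_of(4)))
```

Losing one of four links is the 25% reduction from the example: 30 Mbps of VoIP then needs 40 Mbps of total capacity, or 10 Mbps per link, so the three surviving links still carry 30 Mbps.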

With file transfers, you can skirt a lot closer to the edge, but in the end you still need to be able to transfer all those files in a reasonable amount of time, so you probably still need to slightly overdimension your network.

The web occupies a middle ground between VoIP and file transfers. When you start maxing out available bandwidth, it helps to use HTTP keepalive and pipelining, which reuse TCP sessions and give TCP the opportunity to manage bandwidth better. Some news sites have been known to switch to a smaller version of their website at times when they would otherwise run into bandwidth (or server resource) limits.

The good news is that for most video streaming, it's not a big problem when there is a reduction in bandwidth of, say, 25%, due to a link failure. So for this application, there's much less need to overdimension the network.

Permalink - posted 2019-03-18